Azure Functions, Microsoft’s Function-as-a-Service offering, have been growing in popularity since their release back in 2016. They are simple to use, scalable, and versatile (especially with newer developments such as Durable Functions), and they integrate seamlessly with the rest of the Azure ecosystem. Add the free tier to the mix, and you’ll probably end up working with them sooner rather than later.

Debugging Azure Functions Locally

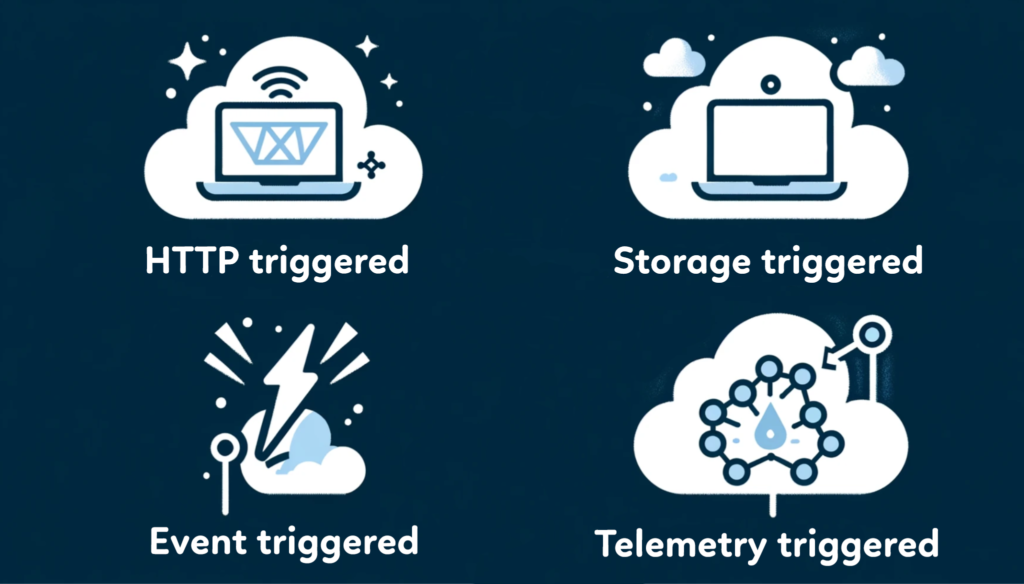

However, depending on your use case and the function trigger, working with them locally can pose significant challenges. If your Azure Functions are HTTP-triggered, getting them to execute locally is quite easy, and for most storage-related triggers you can use the out-of-the-box local storage emulator. But what if your architecture is event-driven and your Azure Functions are triggered by an Event Hub? Or you’re working on an IoT solution, and your functions listen to and process telemetry events?

Event-Driven Azure Functions: Event Hubs for Local Testing

In event-driven systems, you can easily get away with spinning up an Event Hubs instance and using real Azure infrastructure to execute your Azure Function for debugging purposes. You “only” have to create the Event Hub, update the connection strings (or set up permissions so you can use DefaultAzureCredential), and remember to clean everything up once you finish the project. I would recommend one Event Hub per developer. You don’t want to be stepping on anyone’s toes or receiving other developers’ replayed or redelivered events.

Local Development with Azure IoT Hubs

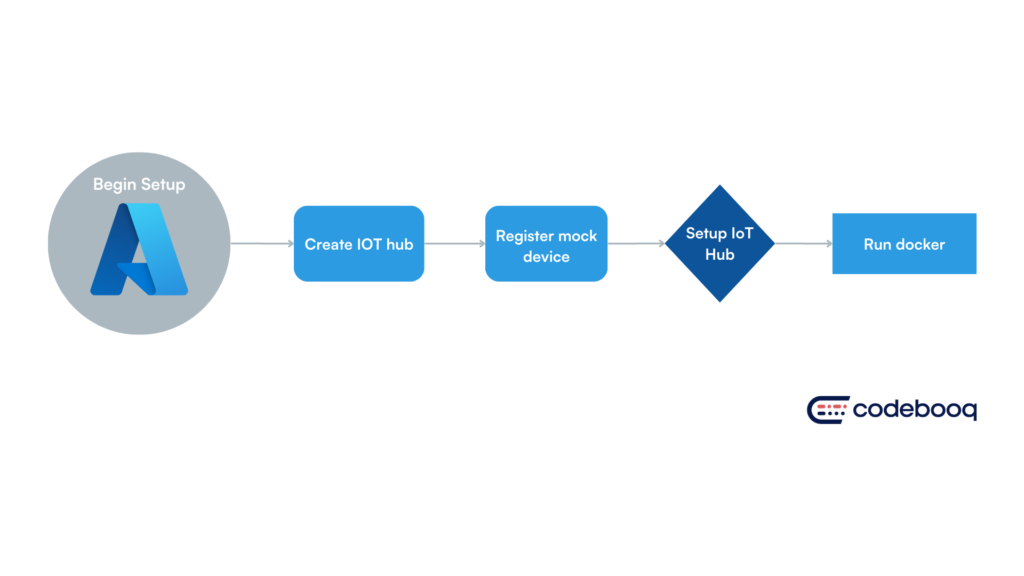

If your architecture has more moving pieces, such as an IoT Hub (either routed to an Event Hub or consumed through its built-in Event Hub-compatible endpoint), you’ll want to create an IoT Hub instance, register your mock device there (Azure IoT Explorer will be your friend), fire up Docker and go wild with the Azure IoT Device Telemetry Simulator. Piece of cake, right?

Using Administrator Endpoints in Azure Functions: The Not-So-Obvious Solution

One cool fact about Azure Functions is that they come with their own set of administrator endpoints. They allow you to manually execute functions via an HTTP request regardless of their trigger.
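To make this concrete, here is a minimal, BCL-only sketch of the request such an endpoint expects. It assumes the documented shape for manually running a non-HTTP-triggered function: a POST to {baseUrl}/admin/functions/{functionName} with a JSON body whose input property holds the trigger input as a string, plus an x-functions-key header carrying the master key when you call a deployed app. The helper name BuildAdminRequest and the sample values are hypothetical; http://localhost:7071 is the default address of a function app started with Azure Functions Core Tools.

```csharp
using System;
using System.Net.Http;
using System.Net.Mime;
using System.Text;
using System.Text.Json;

// Local run: Core Tools listens on http://localhost:7071 by default; no key is passed here.
using var request = BuildAdminRequest(
    "http://localhost:7071", "SampleFunction", "{\"deviceId\":\"sensor-1\"}");
Console.WriteLine($"{request.Method} {request.RequestUri}");

// Hypothetical helper: builds a POST to {baseUrl}/admin/functions/{functionName}.
// The admin endpoint expects the trigger input as a string in the body's "input" property.
static HttpRequestMessage BuildAdminRequest(
    string baseUrl, string functionName, string input, string? masterKey = null)
{
    var message = new HttpRequestMessage(
        HttpMethod.Post, $"{baseUrl.TrimEnd('/')}/admin/functions/{functionName}")
    {
        Content = new StringContent(
            JsonSerializer.Serialize(new { input }), Encoding.UTF8, MediaTypeNames.Application.Json)
    };

    // A deployed function app requires its master key in this header.
    // Leaving it out for local runs is an assumption about the Core Tools host.
    if (masterKey is not null)
    {
        message.Headers.Add("x-functions-key", masterKey);
    }

    return message;
}
```

Sending it is then a matter of passing the message to HttpClient.SendAsync while the host is running; the runtime typically answers with 202 Accepted and runs the function in the background.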

Invoking these endpoints requires the function app’s master key, which grants full administrative access, so relying on them in production is a horrible idea from a security perspective. Locally, however, they greatly simplify getting your functions to trigger. Hopefully, you have already encapsulated your application’s event-emitting logic in a service method. It might look something like this:

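```csharp
using System.Text.Json;
using System.Threading.Tasks;
using Azure.Messaging.EventHubs;
using Azure.Messaging.EventHubs.Producer;

private readonly EventHubProducerClient client;

…

// Serializes the payload to JSON and publishes it to the configured Event Hub.
public async Task EmitAsync<T>(T payload)
{
    var eventData = new EventData(JsonSerializer.Serialize(payload));

    await client.SendAsync(new EventData[] { eventData });
}
```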

For local runs, you can then provide an alternative implementation of the same service that calls the administrator endpoint instead:

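```csharp
using System.Net.Http;
using System.Net.Mime;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

private readonly HttpClient client;

private readonly string adminEndpoint;

…

// Sends the payload to the function's administrator endpoint instead of Event Hubs.
public async Task EmitAsync<T>(T payload)
{
    using var response = await client.PostAsync(adminEndpoint, SerializeContent(payload));

    // Surface non-success responses (e.g. a wrong function name) instead of silently ignoring them.
    response.EnsureSuccessStatusCode();
}

// The admin endpoint expects { "input": "<payload serialized as a JSON string>" }.
private static StringContent SerializeContent<E>(E content)
    => new(JsonSerializer.Serialize(new
    {
        input = JsonSerializer.Serialize(content)
    }), Encoding.UTF8, MediaTypeNames.Application.Json);
```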

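Let’s take a closer look at what happened here. We serialize the payload into a JSON string, assign that string to the input property of a new (anonymous) object, and finally serialize that object into the request content. The payload is serialized twice because the administrator endpoint expects the trigger input as a string value of the input property, not as a nested JSON object.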
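To see the double serialization in action, the sketch below copies SerializeContent into a small console program and reads back the request body it produces. The anonymous payload is a hypothetical stand-in for your own event type. The input property ends up holding a JSON string rather than a nested object; with System.Text.Json’s default encoder, the inner quotes may appear as \u0022 escapes, which any JSON parser reads back as ordinary quotes.

```csharp
using System;
using System.Net.Http;
using System.Net.Mime;
using System.Text;
using System.Text.Json;

// Hypothetical payload standing in for a real telemetry event.
var samplePayload = new { DeviceId = "sensor-1", Temperature = 21.5 };

// Build the request content the same way the local emitter does, then read it back.
using var requestContent = SerializeContent(samplePayload);
string requestBody = await requestContent.ReadAsStringAsync();
Console.WriteLine(requestBody);

// "input" holds a JSON string, which can itself be parsed back into the payload.
using var outer = JsonDocument.Parse(requestBody);
string inputJson = outer.RootElement.GetProperty("input").GetString()!;
Console.WriteLine(inputJson);

// Copy of SerializeContent from the local emitter: serialize the payload to a JSON
// string, then wrap that string in the "input" property of an anonymous object.
static StringContent SerializeContent<E>(E content)
    => new(JsonSerializer.Serialize(new
    {
        input = JsonSerializer.Serialize(content)
    }), Encoding.UTF8, MediaTypeNames.Application.Json);
```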

Now you just need to register either the local or the ‘real’ event-emitting implementation, depending on your environment. And voilà, you’re all set. Or are you?

If your function is bound to an Event Hub, the function declaration contains the Event Hub connection details, either directly or as references to configuration settings:

```csharp
[Function(nameof(SampleFunction))]
public void SampleFunction([EventHubTrigger("%EventHubName%",
    Connection = "EventHubConnection")] EventPayload[] input)
{
    // …
}
```

Without a valid, working Event Hub reference, starting the function will produce one of these runtime errors, telling you that the host cannot resolve the configured Event Hub connection (or, in the last case, reach the local storage emulator the trigger relies on for checkpointing):

```
Microsoft.Azure.WebJobs.Host: Error indexing method 'Functions.SampleFunction'. Microsoft.Azure.EventHubs: Value cannot be null. (Parameter 'connectionString')
Microsoft.Azure.WebJobs.Host: Error indexing method 'Functions.SampleFunction'. Microsoft.Azure.EventHubs: Value for the connection string parameter name 'myConnectionString' was not found. (Parameter 'connectionString')
The listener for function 'Functions.SampleFunction' was unable to start. Microsoft.WindowsAzure.Storage: No connection could be made because the target machine actively refused it. (127.0.0.1:10000)
```

Since needing a working Event Hub defeats the purpose of our approach, we’ll use a nifty little workaround. If you disable the function in local.settings.json, it won’t listen to Event Hub events and therefore won’t try to resolve the connection details, but its administrator endpoints will still be up and running:

```json
"Values": {
  …
  "AzureWebJobs.SampleFunction.Disabled": true,
  …
}
```

Congratulations! You may now trigger the function locally!

The Limitations of Administrator Endpoints in Azure Functions

Administrator endpoints provide a clean and easy way to execute Azure Functions, but there are scenarios in which this approach falls short. Because we are effectively removing Event Hubs from the equation, the parallelization and partitioning we usually take for granted are not available locally. The HTTP requests are simply handled on a first-come-first-served basis.

Event batching, a native feature of the Event Hubs SDKs, does not appear to be supported by the administrator endpoints, so you can’t send a batch of events through them. This prevents you from reproducing high-throughput scenarios locally; if that is crucial for your debugging, keep an eye on these open issues.

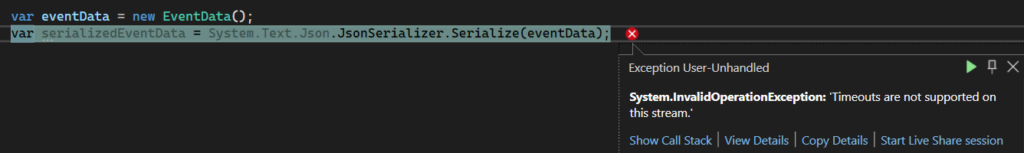

EventData Object Limitations

The last, but perhaps most significant, shortcoming is related to data binding. If your Azure Function input is bound to a custom type or a string, sending the event payload via an HTTP request works swimmingly. However, if your function binds to the entire EventData object, you will have to modify your function code. A look at the anatomy of the EventData class quickly shows that it is not JSON-friendly: its body is binary, and properties such as the sequence number and enqueued time are read-only values set by the service. As a result, you can’t pass it to the administrator endpoint.

If your code depends on it (maybe you rely on metadata such as the event source or a timestamp), you might want to wrap these values in a custom property and construct the EventData object somewhere in the Azure Functions pipeline.
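One possible way to carry that metadata through the administrator endpoint is a small envelope: the payload plus a metadata object, serialized into the input string and unpacked again on the function side, where the recovered values could feed into an EventData instance. This is a hypothetical, BCL-only sketch; the property names body, metadata, enqueuedTime and source, as well as the helper names Wrap and Unwrap, are illustrative assumptions rather than part of any Azure API.

```csharp
using System;
using System.Text.Json;

// Hypothetical payload and metadata for a single locally simulated event.
var samplePayload = new { DeviceId = "sensor-1", Temperature = 21.5 };
var enqueuedAt = new DateTimeOffset(2024, 1, 15, 10, 30, 0, TimeSpan.Zero);

// Producer side: this string is what you would pass as the admin endpoint's "input".
string adminInput = Wrap(samplePayload, enqueuedAt, "local-simulator");
Console.WriteLine(adminInput);

// Function side (e.g. in middleware or a filter): recover the body and metadata,
// from which an EventData instance could then be constructed.
var (eventBody, eventTime, eventSource) = Unwrap(adminInput);
Console.WriteLine($"{eventSource} @ {eventTime:O}: {eventBody.GetRawText()}");

// Assumed envelope layout: { "body": <payload>, "metadata": { "enqueuedTime": ..., "source": ... } }
static string Wrap<T>(T payload, DateTimeOffset enqueuedTime, string source)
    => JsonSerializer.Serialize(new
    {
        body = payload,
        metadata = new { enqueuedTime, source }
    });

static (JsonElement Body, DateTimeOffset EnqueuedTime, string? Source) Unwrap(string input)
{
    using var document = JsonDocument.Parse(input);
    JsonElement root = document.RootElement;
    JsonElement metadata = root.GetProperty("metadata");

    // Clone() detaches the body element so it stays valid after the document is disposed.
    return (root.GetProperty("body").Clone(),
            metadata.GetProperty("enqueuedTime").GetDateTimeOffset(),
            metadata.GetProperty("source").GetString());
}
```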

If you’re using the isolated worker process model (and I hope you are), you can conditionally register middleware that, in your dev environment, modifies the function context by creating the EventData object with the desired metadata.

On the other hand, if you’re working with the in-process model, your best bet might be function filters. They expose the function context and let you manipulate it, and despite having been in preview for several years now, they seem to work reliably.

Embracing Azure Functions for Streamlined Development

The tools and techniques discussed here should help you navigate local development in the Azure ecosystem, whether you’re handling event-driven architectures or integrating IoT solutions. Stay curious, stay adaptable, and let Azure Functions unlock new possibilities in your cloud computing endeavours. Happy coding!

All the examples are available in this GitHub repository.